May 23, 2020

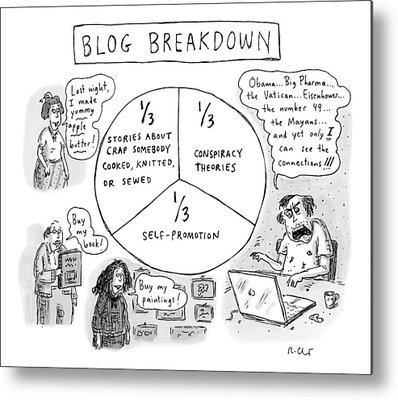

In Part I of this post, we explored how mistrust of the scientific and medical “establishments” has led significant numbers of people worldwide to invest in easily disproven conspiracy theories (see Fig. II.1), ranging from the apparently harmless (fake Moon landing) to the potentially fatal (belief in quack cancer cures). While conspiracy theorists and true believers have been around throughout human history, research attempting to understand the motivations, psychology, thought processes and brain function of the believers is a relatively new field. The research has been spurred in part by early social science investigations that suggested the existence of a generalized tendency to believe in conspiracies, i.e., “people who believed in one conspiracy were more likely to also believe in others.” The research has blossomed particularly during the past decade, encompassing studies in sociology, experimental psychology, political science, information sciences, brain imaging and neurochemistry. Much of the relevant research has been recently reviewed and summarized by Douglas, et al.

In the ensuing subsections we will review some of the research findings on the social motivations of true believers, the mechanisms for the reinforcement and spread of their beliefs, and what is known about their psychology and brain function. We will conclude with a brief discourse on the dangers of conspiracy belief, and why it behooves us to understand better the believers’ mindset.

II.1 Social Motivations of Conspiracy Theory True Believers

A conspiracy theory that rejects science offers its proponents a path to avoid a reality, or an explanation of reality, that does not appeal to them, for a variety of reasons. Often the conspiracy belief results from motivated reasoning. As Douglas et al. put it: “People resort to motivated reasoning when they are presented with facts that contradict their predispositions, and they will interpret new information in such a way as to not disturb their previously held worldviews…The literature strongly suggests that conspiracy theories must align with a person’s existing set of predispositions to be adopted.”

There is a considerable body of research on conspiracy beliefs that are driven by motivated reasoning and align with people’s political partisanship. For three recent examples, see Edelson, et al. (2017) on belief in election fraud, Enders and Smallpage (2018) on partisan American conspiracy theories, and Uscinski, et al. (2020) on belief in COVID-19 conspiracy theories. The alleged climate change “hoax” and belief that the threat of COVID-19 has been greatly exaggerated are two prime examples of science denial conspiracies whose believers seem largely driven by motivated partisan reasoning.

The worldview protected by motivated reasoning need not be explicitly political or ideological. Some people have a conspiratorial worldview, i.e., a psychological predisposition to view major events as the product of conspiracies. Such a conspiratorial outlook often results from feelings of uncertainty and powerlessness in the face of overwhelming, complex events (e.g., a school shooting or a global pandemic), or from existential anxiety. Dannagal Young, a professor of communication and political science, has recently described how she dabbled in conspiracy theories in order to assign blame for the brain tumor that ultimately took her young husband’s life. She puts it this way:

“Under conditions of uncertainty, information that helps direct our negative emotions toward a target is psychologically comforting. When we feel powerless in a situation that is both complex and overwhelming, the identification of people and institutions to ‘blame’ feels good to us.

This explains not only families lashing out when their loved ones are ill, but also the appeal of conspiracy theories more broadly. Especially in the context of ambiguous and terrible events like 9/11 or the Sandy Hook shooting, conspiracy theories increase perceptions of control. Such narratives typically point to the existence of secret plots by powerful actors working behind the scenes, either to cause the horrible chaos or to fabricate it. The anger we then feel toward these “powerful actors” is accompanied by a feeling of efficacy (confidence in one’s ability to effectively navigate the world), hence increasing the likelihood that we will take action — by engaging in political participation, protest, or, in the case of a loved one’s medical situation, maybe filing a lawsuit.”

A conspiratorial worldview is also often shared by people or groups who feel themselves to be undervalued, underprivileged or under threat. At an individual level, belief in conspiracy theories is often associated with narcissism, an exaggerated self-view in need of constant external validation. Conspiracy theories may fill a narcissistic need for a feeling of uniqueness, allowing the believer to feel in possession of rare information that most others lack the insight or intellect to perceive. But there is also collective narcissism, which allows members of a group to justify a misplaced sense of their own superiority by using conspiracy theories to denigrate non-members who fail to appreciate or acknowledge their greatness.

Conspiracy theories often allow low-status groups to account for their disadvantaged position, but the outlook does not necessarily disappear when elevated status is achieved. In the U.S., for example, we have seen that even after the election of President Donald Trump, he and his media allies have continued to cultivate a sense of victimhood among his supporters, so that they maintain belief that “elites” are still conspiring to “control them,” whether by election fraud, by investigative “hoaxes,” or by assaulting their freedoms with shelter-at-home orders allegedly intended to mitigate the spread of a purposely exaggerated pandemic.

Because the people who try to provide more carefully and coherently reasoned explanations for events and processes are often “experts” of one sort or another – especially academics and scientists – the conspiratorial outlook is often coupled to rejection and resentment of expert information, and particularly to science denial. Thus, medical research that fails to reveal any evidence of a link between vaccines and autism, or that demonstrates coronavirus features arguing against a man-made virus engineered in a Chinese laboratory, is too complicated and untrustworthy to replace a simple conspiratorial explanation that gives believers a sense of control and directed anger. Scientific proofs of the Moon landing or of the roundness and rotation of the Earth get in the way of conspiracy believers’ sense of unique insight, and thus are to be rejected. The overwhelming scientific consensus regarding evidence for ongoing anthropogenic global warming must be judged a vast conspiracy if it conflicts with your political tribe’s party line.

II.2 The Spread of Conspiracy Theories

It is not clear that conspiracy theories are more widespread today than they have been through much of human history. But it is clear that modern media have facilitated the promulgation, reinforcement and spread of conspiracy theories through audiences predisposed to believe in them. Furthermore, these media have fueled widespread political exploitation of conspiracy theories. The internet and social media have made it easier than ever to fulfill a need felt by most conspiracy believers to be part of a community, or echo chamber, of like-minded believers. The internet and social media are fulfilling this need in at least three ways: (1) they foster the formation and maintenance of distinct and polarized online communities; (2) they offer a direct path, free of middlemen, for mis-leaders – producers of conspiratorial narratives – to transmit “talking points” to willing consumers of misinformation; and (3) they allow the rapid organization of massive actions and protests by members of a polarized community.

There are many websites and focus groups devoted to conspiracy theories of one sort or another (see Fig. II.2), just as there are many, like this one, dedicated to sharing research-based information. But there is little evidence of cross-fertilization in the readership of the two classes of sites. Seekers of conspiracy theory sites are largely those already predisposed to believe in massive conspiracies. Bessi, et al. have analyzed the Facebook activities of nearly 800,000 Italian users who frequent primarily conspiracy pages and groups spanning four distinct categories: geopolitics, environment, health and diet. Each of these users attached at least 95% of their total number of “likes” to conspiracy pages. The authors also analyzed the mobility of these users across the four conspiracy categories, and found that the more engaged a user was (i.e., the more total “likes” they had registered), the more likely that engagement was to cross conspiracy categories. The conspiratorial mindset often seeks out new conspiracy theories to adopt in order to reinforce a consistent worldview. For example, many new believers in flat Earth theories admit that they first learned and adopted the false narratives from YouTube videos that turned up when they were seeking information on other conspiracy theories. The internet offers bottomless rabbit holes to get lost in.

The polarization of online communities reinforces confirmation bias. Information that supports or appears to validate a prejudice or self-deception is accepted rather uncritically, while information that refutes one’s beliefs or wishful thinking is mistrusted and most often rejected. This is reflected not only in the internet and social media search behavior of conspiracy believers, but also in the way they communicate among themselves and, occasionally, with non-believers. For example, Bessi, et al. also found that conspiracy users tended to uncritically endorse and distribute within their online communities even deliberately false, highly implausible Facebook posts that had been made to parody conspiracy theories, as long as those posts reinforced the conspiratorial worldview.

Occasionally, controversial news stories or online posts attract a high volume of comments from both conspiracy proponents and supporters of the “official” accounts. These occasions have afforded some researchers an opportunity to compare the communication styles of the two groups. Wood and Douglas analyzed back-and-forth comments from 9/11 “truthers” (people who believe the U.S. was involved in staging the apparent terrorist attacks at the World Trade Center and the Pentagon) and their opponents on several mainstream news sites. They found that the conspiracy proponents spent most of their time poking holes in the official explanation, rather than offering coherent alternative theories. The anti-conspiracy commenters devoted most of their attention to advocating for the official explanation, rather than arguing against the conspiracy theory. Faasse, Chatman and Martin analyzed the language used by the two sides in 1500 comments on a Mark Zuckerberg Facebook post about vaccinations, and found that anti-vaxxers tended to use more authoritative, confident, assured and manipulative language than the commenters who supported vaccination programs. This reflects the Dunning-Kruger effect we discussed in Part I of this post, the misplaced, uncritical confidence that accompanies belief in an unfalsifiable viewpoint.

In addition to online media, more traditional media – and especially cable TV news shows and political talk radio – also play a critical role in the spread, particularly in the political manipulation, of conspiracy theories. In Part I of this post, we highlighted the important role played by a 2001 Fox News documentary in spreading the fake Moon landing “hoax.” In our post on Science Denial and the Coronavirus, we analyzed the roles of various media outlets and personalities in promulgating false narratives about COVID-19. As we write this, a new Yahoo News/YouGov poll (see Fig. II.3) reveals the strong correlation of political preference and choice of news source with belief in a new, baseless conspiracy theory that Bill Gates is funding the development of a COVID-19 vaccine in order to implant microchips in people to track their whereabouts. (Apparently, believers in this theory don’t realize that their cell phones can already accomplish that task quite effectively.)

In our posts on Wellness Fads we reported on the many times TV personality Dr. Oz has used his popular platform to champion “snake-oil” cures with no medical research backing. These, and many other, unreliable narrators are out to improve their own bottom lines, and they are succeeding because there is an audience hungry for misinformation that will validate their own wishful thinking. Are these seekers simply representative of the normal human need to confirm preconceived notions? Or do their minds actually work in distinct ways from those of people who weigh evidence carefully and do not uniformly distrust experts?

II.3 The Psychology of Conspiracy Believers

A considerable number of psychological experiments probing the mentality and cognitive biases of conspiracy believers have been summarized in the recent review by Douglas, et al. A few basic themes emerge from these studies. Groups who are more prone to conspiracy belief include: (a) people who perceive patterns in randomness (so-called illusory pattern perception); (b) people who consistently seek patterns and meaning in their environment, but tend to attribute agency and intentionality where it does not exist or is unlikely to exist (hypersensitive agency detection) – including believers in paranormal and supernatural phenomena; and (c) people who overestimate their ability to understand complex causal phenomena. As summarized by Douglas, et al.: “Overall, there is evidence that conspiracy theories appear to appeal to individuals who seek accuracy and/or meaning, but perhaps lack the cognitive tools or experience problems that prevent them from being able to find accuracy and meaning via other more rational means.”

Illusory pattern perception (see Fig. II.4) was probed in a series of five psychological experiments reported by van Prooijen, Douglas and De Inocencio in their 2017 paper, entitled “Connecting the Dots: Illusory Pattern Perception Predicts Belief in Conspiracies and the Supernatural.” All of their studies supported the conclusion that belief in conspiracies and the supernatural was strongest among subjects who “mistakenly perceive randomly generated stimuli [for example, drawn from coin toss outcomes or Jackson Pollock paintings] as causally determined through a nonrandom process, and hence as diagnostic for what future stimuli to expect.”

Pattern recognition is a critical function of the human brain developed through millions of years of evolution to enhance threat avoidance. But different people have different levels of ability in distinguishing meaningful from likely meaningless patterns. In the following subsection, we will discuss possible neurochemical origins of these differences. Part of the problem, as van Prooijen, et al. point out is that “truly random sequences typically display less variation—and hence form more clusters—than people intuitively expect, creating the feeling of meaningful patterns that in fact occurred at random… As a result, it is difficult for people to appreciate the role of coincidence in generating these pattern-like sequences.”
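The point about clusters in random sequences can be checked with a short simulation. The sketch below is only an illustration and is not taken from van Prooijen, et al.; the function names, the choice of 100 tosses per sequence, and the number of trials are all assumptions made for the example. It tosses a fair simulated coin repeatedly and records the longest streak of identical outcomes in each sequence.

```python
import random


def longest_run(seq):
    """Return the length of the longest streak of identical consecutive items."""
    best = 0
    current = 0
    previous = object()  # sentinel that is never equal to a real item
    for item in seq:
        current = current + 1 if item == previous else 1
        best = max(best, current)
        previous = item
    return best


def average_longest_run(n_tosses=100, n_trials=2000, seed=0):
    """Average longest streak over many simulated fair-coin sequences.

    n_tosses, n_trials and seed are illustrative choices, not values
    from any of the studies discussed in the text.
    """
    rng = random.Random(seed)
    total = 0
    for _ in range(n_trials):
        tosses = [rng.choice("HT") for _ in range(n_tosses)]
        total += longest_run(tosses)
    return total / n_trials


if __name__ == "__main__":
    print(f"Average longest streak in 100 tosses: {average_longest_run():.2f}")
```

With these settings the average longest streak should land near seven heads or tails in a row, which is longer than most people would guess for a purely random sequence of 100 tosses, and is the kind of cluster that can feel meaningful when it is not.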

This difficulty applies also to pattern perception among world events: “acceptance of a conspiracy theory implies an increase in the extent to which people perceive patterns in world events, as reflected in the belief that instead of being a coincidence, many events that happen in the world are somehow causally related. This perception of patterns in world events is associated with other, unrelated irrational beliefs.” Indeed, other research has demonstrated that people who perceive such nefarious patterns are prone to believe even in mutually contradictory conspiracy theories. For example: “the more participants believed that Princess Diana faked her own death, the more they believed that she was murdered… the more participants believed that Osama Bin Laden was already dead when U.S. special forces raided his compound in Pakistan, the more they believed he is still alive.”

This aspect of conspiracy mentality is ironic, because one of the standard narratives pushed by mis-leaders about climate change is that “correlation is not proof of causation.” And yet many of the believers who buy into this narrative seem to consider correlations they perceive where there aren’t any as proof of causation regarding the existence of a massive worldwide climate change “hoax.”

Hypersensitive agency detection (“Someone is pulling the strings”) is a cognitive bias related to illusory pattern perception. It has been measured, for example, by asking study subjects to rate the intentionality, purposefulness and conscious decision-making of simple shapes moving around a screen and in and out of an opening and closing rectangular box in a brief animation. Douglas, et al. asked the same subjects to complete questionnaires that provided demographic data and also that revealed their tendency to anthropomorphize inanimate objects (e.g., “To what extent does the average mountain have free will?” or “To what extent does the environment experience emotions?”), the extent of their belief in a set of well-known conspiracy theories (e.g., “Scientists are creating panic about climate change because it is in their interests to do so” or “The attack on the Twin Towers was not a terrorist action but a governmental plot”), and the extent of their belief in paranormal phenomena (e.g., “Some people can make me aware of them just by thinking about me” or “I have sometimes felt that strangers were reading my mind”).

The correlations that resulted from the above study revealed that subjects who were more likely to attribute agency and intentionality to inanimate objects were also more likely to endorse conspiratorial explanations for well-known events. Furthermore, “in the current studies individuals with higher levels of education were less likely to see intentionality everywhere and less likely to believe in conspiracy theories.” The latter finding is also consistent with other research suggesting that “people with high education levels, or with strong analytic thinking skills, are less susceptible to irrational beliefs than people with low education levels or weak analytic thinking skills.”

Yet another manifestation of analytical thinking deficits has been measured by “bullshit receptivity,” a tendency to see profundity in meaningless statements composed of fancy words and constructed following rules of grammar and syntax (e.g., “Wholeness quiets infinite phenomena” and “Imagination is inside exponential space time events”). The statements used in the bullshit receptivity scale proposed by Pennycook, et al., have been constructed from buzzwords appearing in tweets from Deepak Chopra, or sometimes just taken without modification from Chopra tweets. Application of this scale to test subjects established that people who have high bullshit receptivity are more likely to believe in conspiracy theories.

Vitriol and Marsh have explored how the illusion of explanatory depth – inflated confidence in one’s causal understanding of phenomena – is correlated with endorsement of conspiracy theories, specifically in the political domain. Participants in their study were asked to rate how well they understood six political policies, and then asked to provide as detailed an explanation as they could for how those policies actually worked. “Those who overestimated their knowledge were more likely to believe conspiracies like the U.S. government intentionally created AIDS or that Princess Diana’s death was not an accident but rather an assassination.” This is a case where correlation does not necessarily imply causation. Exaggerated confidence in one’s insight and depth of understanding is a Dunning-Kruger characteristic of conspiracy believers, a characteristic reinforced by the constructed unfalsifiability of the conspiracy beliefs. This raises doubts about the authors’ optimistic suggestion that “it is possible that exposing this illusion of understanding the political domain may be an effective means for reducing conspiratorial thinking and even political misperceptions.”

The cognitive deficits and biases of conspiracy believers appear to lie along a continuum of illusory thinking that is also found in more serious personality disorders, such as narcissism, nonclinical delusional thinking, paranoia and schizotypy – a tendency to exhibit mild symptoms of schizophrenia. In a blog on the Psychology Today site, Dr. Joe Pierre explains some of the distinctions along this spectrum in this way: “The key difference between delusions and conspiracy theories is that delusions are by definition false and not shared by others. They often have a self-referential aspect, meaning that the believer is part of the delusional story. Conspiracy theories, on the other hand, are by and large shared beliefs that usually lack a self-referential quality. And of course, also unlike delusions, sometimes conspiracy theories turn out to be true.” Similarly, psychological research has established a clear overlap between conspiracy believers and those with subclinical paranoia, but has also delineated differences in their manifestations: “Whereas paranoid people believe that virtually everyone is after specifically them, conspiracy believers think that a few powerful people are after virtually everyone.”

A recent integrative study testing the independent contributions of various cognitive quirks to conspiratorial thinking has “found that schizotypy, dangerous-world beliefs, and bullshit receptivity independently and additively predict endorsement of generic (i.e., nonpartisan) conspiracy beliefs.” The current version of the Diagnostic and Statistical Manual of Mental Disorders (DSM-5) lists the symptoms of schizotypal personality disorder as including: discomfort in social situations; odd beliefs, fantasies or preoccupations; odd behavior or appearance; odd speech; difficulty making/keeping friendships; inappropriate display of feelings; and suspiciousness or paranoia. It represents “an ingrained pattern of thinking and behavior marked by unusual beliefs and fears,” and the same may be said of those who believe strongly in several science denial conspiracy theories. Indeed, some sufferers along the schizotypy spectrum may find participation in online communities of like-minded conspiracy theists an effective technique for establishing human connections.

II.4 The Role of Dopamine

The neurochemical link among behaviors along the schizotypy spectrum may be abnormalities in dopamine activity in the human brain. Dopamine is a neurotransmitter, one of several chemicals that facilitate communication across synaptic gaps between sending and receiving neurons. Dopamine activity is illustrated schematically in Fig. II.5. Dopamine is released from sending neurons very rapidly – within 70 to 100 milliseconds, faster than one can “feel” an emotion – in response to stimuli that carry the promise of a reward. Such a spiky dopamine release is the neural system’s way of alerting the brain to a salient stimulus, one that promises to gain “good stuff” for the mind and avoid “bad stuff.”

Dopamine activity is central to the brain’s learning about which stimuli deliver rewards reliably and which do not. According to W. Schultz: “Most dopamine neurons in the midbrain of humans, monkeys, and rodents signal a reward prediction error; they are activated by more reward than predicted (positive prediction error), remain at baseline activity for fully predicted rewards, and show depressed activity with less reward than predicted (negative prediction error). The dopamine signal increases nonlinearly with reward value and codes formal economic utility. Drugs of addiction generate, hijack, and amplify the dopamine reward signal and induce exaggerated, uncontrolled dopamine effects on neuronal plasticity.”
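The three cases in the Schultz quote (positive, zero and negative prediction error) can be made concrete with a toy calculation. The sketch below is not Schultz's model of dopamine neurons; it assumes a simple textbook-style delta rule in which the prediction is nudged toward each received reward, and the function name, the learning rate of 0.2 and the reward schedule are hypothetical choices made only for illustration.

```python
def simulate_prediction_errors(rewards, learning_rate=0.2, initial_prediction=0.0):
    """Return the reward prediction error on each trial.

    error = received reward - predicted reward
    The prediction then moves a fraction (learning_rate) of the way
    toward the received reward. This delta rule is an assumed toy model,
    not a description of real dopamine neurons.
    """
    prediction = initial_prediction
    errors = []
    for reward in rewards:
        error = reward - prediction
        errors.append(error)
        prediction += learning_rate * error
    return errors


if __name__ == "__main__":
    # Thirty rewarded trials, then one trial where the expected reward is omitted.
    rewards = [1.0] * 30 + [0.0]
    errors = simulate_prediction_errors(rewards)
    print(f"first rewarded trial (unexpected reward): {errors[0]:+.3f}")
    print(f"30th rewarded trial (fully predicted):    {errors[29]:+.3f}")
    print(f"omitted reward (less than predicted):     {errors[30]:+.3f}")
```

In this toy model the error starts out large and positive when the reward is a surprise, shrinks toward zero as the reward becomes fully predicted, and turns strongly negative on the trial where the expected reward fails to arrive, mirroring the three cases described in the quote.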

A Harvard website concerning dopamine release associated with obsessive smartphone usage continues the theme of dopamine-activity reward prediction errors, centered on the schematic timeline of dopamine spikes shown in Fig. II.6. “These prediction errors serve as dopamine-mediated feedback signals in our brains…as negative outcomes accumulate, the loss of dopamine activity encourages us to disengage. Thus, a balance between positive and negative outcomes must be maintained in order to keep our brains engaged.” Excessive dopamine activity may thus hinder such learning, and keep the brain over-engaged in processing stimuli that deliver no reliable reward in reality.

Dopamine activity thus appears to be central to the brain’s ability to distinguish meaningful from meaningless information, and to update beliefs accordingly. This hypothesis has been tested recently in research by Nour, et al., who performed functional MRI brain scans on test subjects who were exposed to a series of meaningful and meaningless visual and auditory cues – cues that either had predictive content for future monetary reward or had only a random impact on monetary reward. From those MRI scans, they showed that the ability to update beliefs based on meaningful new information, and to distinguish such information from meaningless information, was related to activity encoded in the midbrain and ventral striatum regions of the brain. From PET scans on the same subjects, they found that the ability to sort meaningful from meaningless information, and to update beliefs accordingly, decreased among those subjects whose PET scans revealed higher dopamine release and reception capacity.

In other words, excessive dopamine made subjects more likely to see significance in random information. The high-dopamine subjects also showed more tendency toward “subclinical paranoid ideation.” Nour, et al. conclude: “The findings provide direct evidence implicating dopamine in model-based belief updating in humans and have implications for understanding the pathophysiology of psychotic disorders where dopamine function is disrupted.”

The mentalhealthdaily site summarizes research findings on the role of dopamine abnormalities in schizotypal personality disorders and psychoses:

“Those experiencing paranoia tend to have higher levels of extracellular dopamine in the brain. Those with conditions like paranoid schizophrenia and paranoid personality disorder tend to also have problems with the number of dopaminergic receptors. The paranoia can often be mitigated with drugs that decrease dopamine. Even those without psychiatric conditions can experience paranoia as a byproduct of using certain drugs for the dopamine boost.”

“At an extreme, excess dopamine is associated with delusions among those with schizophrenia and even those without any mental illness. With too much dopamine, a person may become mistrusting of others and come to experience false beliefs and perceptions that have no logical basis in reality. Delusions of ‘grandeur’ may be provoked with extremely high levels of dopamine.”

It thus appears likely from neurochemical research that excessive, though not necessarily extreme, dopamine activity in the brain may be a contributor to several of the cognitive quirks – attaching salience to too many stimuli, sensing patterns and agency where they are highly unlikely to exist – that characterize true believers in massive, utterly improbable, conspiracy theories that defy established scientific consensus.

II.5 The Dangers of Conspiracy Belief

The 19th century American humorist Josh Billings once said: “It ain’t what you don’t know that gets you into trouble. It’s what you know for sure that just ain’t so.” And so it is with science-denial conspiracy theories. The true believers pose risks not just to themselves and their families, but to their communities, their nations, and even to the world.

Anti-vaxxers may be driven by individual feelings of powerlessness, disillusionment and mistrust of authorities, but when they refuse to allow their children to be vaccinated against common diseases, they threaten the herd immunity that has helped to strongly suppress those diseases in the past. People who buy into the rampant COVID-19 “hoax” conspiracies, being promoted for partisan reasons, behave in ways that impede effective mitigation for their communities, and often become carriers of the highly contagious disease themselves. Those who spread misinformation about alleged climate change “hoaxes” erect political barriers against the adoption of measures that might mitigate the global impacts of a very real natural threat. Nature and viruses don’t care about people’s beliefs; they impact realists and conspiracy theists indiscriminately. Psychic investments in “alternative realities” offer little protection when actual reality comes to bite, as Donald Trump is learning the hard way from massive U.S. COVID-19 deaths.

In our current age of misinformation, when the internet, social media and cable news channels offer infinite reservoirs of confirmation bias, conspiracy theories are exacerbating social polarization and a declining trust in government. These features, in turn, are fueling a current worldwide wave of disruptive politics, in which corrupt and cynical politicians, with the aid of media allies, are willing to launch, promote and exploit conspiracy theories to gain and preserve political power, even if those theories complicate dealing with reality. Conspiracy theists provide their cult-like political base. And some members of that base appear willing to employ violence in the protection of their cherished conspiracy theories.

Scientists are not the “enemy” on these issues. The vast majority of scientists worldwide are devoted to characterizing and understanding nature as it is, and to projecting natural developments as far as possible. They are driven to uncover flaws in the thinking not just of the public and politicians, but of other scientists as well. Their competitive nature makes them the unlikeliest perpetrators of massive conspiracies or of the suppression of medical breakthroughs. Warnings of severe natural threats – pandemics and global climate change – that emerge from broad scientific consensus are ignored at great risk to humanity.

Despite these very real risks, overcoming widespread conspiracy belief is a daunting challenge. The Dunning-Kruger effect and the unfalsifiability defense mechanism adopted by many conspiracy believers limit the effectiveness of many intervention strategies. Attempts to reason on a factual basis usually backfire, because they are judged to be further “proof” of the conspiracy. Some success has been achieved in controlled situations by “inoculating” people with counter-conspiracy information before they are exposed to an anti-vaccine conspiracy theory. We are also beginning to see examples of conversions of COVID-19 “hoaxers” from their hospital beds, or of climate change “hoaxers” who “see the light” after personal exposure to severe climate impacts. But waiting for reality to take sufficiently hard bites out of significant numbers of conspiracy believers engenders an awful lot of risk to the rest of us still willing to invest trust in experts.

The most promising mitigation strategy to reduce conspiracy belief is the long-term one of education, of training young people to engage in critical thinking and to recognize flawed, illogical and deliberately misleading arguments, hopefully before they are exposed to too many such arguments. That is the primary motivation for this blog site. It is also the motivation that has led two faculty members at the University of Washington to develop a new course entitled “Calling Bullshit.” For them, “Bullshit involves language, statistical figures, data graphics, and other forms of presentation intended to persuade by impressing and overwhelming a reader or listener, with a blatant disregard for truth and logical coherence… Our aim in this course is to teach you how to think critically about the data and models that constitute evidence in the social and natural sciences.” Can I get an “amen?”

References:

T. Goertzel, Belief in Conspiracy Theories, Political Psychology 15, 731 (1994), https://www.researchgate.net/publication/270271929_Belief_in_Conspiracy_Theories

K.M. Douglas, et al., Understanding Conspiracy Theories, Advances in Political Psychology 40, Suppl. 1, 3 (2019), https://onlinelibrary.wiley.com/doi/epdf/10.1111/pops.12568

Z. Kunda, The Case for Motivated Reasoning, Psychological Bulletin 108, 480 (1990), http://www2.psych.utoronto.ca/users/peterson/psy430s2001/Kunda%20Z%20Motivated%20Reasoning%20Psych%20Bull%201990.pdf

J. Edelson, A. Alduncin, C. Krewson, J.A. Sieja and J.E. Uscinski, The Effect of Conspiratorial Thinking and Motivated Reasoning on Belief in Election Fraud, Political Research Quarterly 70, 933 (2017), http://www.joeuscinski.com/uploads/7/1/9/5/71957435/uscinski_et_al._apsa_conspiracy_submission.pdf

A.M. Enders and S.M. Smallpage, Polls, Plots and Party Politics: Conspiracy Theories in Contemporary America, in J.E. Uscinski (Ed.), Conspiracy Theories and the People Who Believe Them (Oxford University Press, 2018) pp. 298-318, https://www.researchgate.net/publication/329819138_Polls_Plots_and_Party_Politics_Conspiracy_Theories_in_Contemporary_America

J.E. Uscinski, A.M. Enders, C. Klofstad and M. Seelig, Why Do People Believe COVID-19 Conspiracy Theories?, Harvard Kennedy School Misinformation Review (April 28, 2020), https://www.researchgate.net/publication/340993241_Why_do_people_believe_COVID-19_conspiracy_theories

D.G. Young, I Was a Conspiracy Theorist, Too, Vox (May 15, 2020), https://www.vox.com/first-person/2020/5/15/21258855/coronavirus-covid-19-conspiracy-theories-cancer

J.E. Uscinski and J.M. Parent, American Conspiracy Theories (Oxford University Press, 2014), https://www.amazon.com/American-Conspiracy-Theories-Joseph-Uscinski/dp/0199351813

A. Cichocka, M. Marchlewska, A. Golec de Zavala and M. Olechowski, “They Will Not Control Us:” Ingroup Positivity and Belief in Intergroup Conspiracies, British Journal of Psychology 107, 556 (2016), https://onlinelibrary.wiley.com/doi/10.1111/bjop.12158

A. Bessi, et al., Trend of Narratives in the Age of Misinformation, PLoS ONE 10, e0134641 (2015), https://journals.plos.org/plosone/article/file?id=10.1371/journal.pone.0134641&type=printable

M.M. Kircher, Can You Believe YouTube Caused the Rise in Flat-Earthers?, New York Magazine (Feb. 19, 2019), https://nymag.com/intelligencer/2019/02/can-you-believe-youtube-caused-the-rise-in-flat-earthers.html

M.J. Wood and K.M. Douglas, “What About Building 7?” A Social Psychological Study of Online Discussion of 9/11 Conspiracy Theories, Frontiers in Psychology 2013, 4:409, https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3703523/

K. Faasse, C.J. Chatman and L.R. Martin, A Comparison of Language Use in Pro- and Anti-Vaccination Comments in Response to a High-Profile Facebook Post, Vaccine 34, 5808 (2016), https://www.sciencedirect.com/science/article/pii/S0264410X16308428

https://en.wikipedia.org/wiki/Dunning–Kruger_effect

J.-W. van Prooijen, K.M. Douglas and C. De Inocencio, Connecting the Dots: Illusory Pattern Perception Predicts Belief in Conspiracies and the Supernatural, European Journal of Social Psychology 48, 320 (2018), https://onlinelibrary.wiley.com/doi/full/10.1002/ejsp.2331

PatrickJMT, The Origin of Countless Conspiracy Theories, https://www.ted.com/talks/patrickjmt_the_origin_of_countless_conspiracy_theories#t-2265

K.M. Douglas, et al., Someone is Pulling the Strings: Hypersensitive Agency Detection and Belief in Conspiracy Theories, Thinking and Reasoning 22, 57 (2016), https://kar.kent.ac.uk/48402/1/Douglas%20et%20al%20-%20CTs%20and%20HAD.pdf

M. Bruder, et al., Measuring Individual Differences in Generic Beliefs in Conspiracy Theories Across Cultures: Conspiracy Mentality Questionnaire, Frontiers in Psychology 2013, 4:225, https://kops.uni-konstanz.de/bitstream/handle/123456789/23026/Bruder_230264.pdf?sequence=2

J.A. Vitriol and J.K. Marsh, The Illusion of Explanatory Depth and Endorsement of Conspiracy Beliefs, European Journal of Social Psychology 48, 955 (2018), https://onlinelibrary.wiley.com/doi/abs/10.1002/ejsp.2504

M.J. Wood, K.M. Douglas and R.M. Sutton, Dead and Alive: Beliefs in Contradictory Conspiracy Theories, Social Psychological and Personality Science 3, 767 (2012), https://kar.kent.ac.uk/28566/1/Wood%20et%20al%202012%20SPPS.pdf

M. Blagrove, C.C. French and G. Jones, Probabilistic Reasoning, Affirmative Bias and Belief in Precognitive Dreams, Applied Cognitive Psychology 20, 65 (2006), https://onlinelibrary.wiley.com/doi/abs/10.1002/acp.1165

V. Swami, et al., Analytic Thinking Reduces Belief in Conspiracy Theories, Cognition 133, 572 (2014), https://www.sciencedirect.com/science/article/abs/pii/S0010027714001632

G. Pennycook, et al., On the Reception and Detection of Pseudo-Profound Bullshit, Judgment and Decision Making 10, 549 (2015), http://journal.sjdm.org/15/15923a/jdm15923a.pdf

E.W. Dolan, People Who Overestimate Their Political Knowledge Are More Likely to Believe Conspiracy Theories, https://www.psypost.org/2018/06/people-overestimate-political-knowledge-likely-believe-conspiracy-theories-51447

A. Cichocka, M. Marchlewska and A. Golec de Zavala, Does Self-Love or Self-Hate Predict Conspiracy Beliefs? Narcissism, Self-Esteem and the Endorsement of Conspiracy Theories, Social Psychological and Personality Science 7, 157 (2016), https://journals.sagepub.com/doi/abs/10.1177/1948550615616170

N. Dagnall, et al., Conspiracy Theory and Cognitive Style: A Worldview, Frontiers in Psychology 2015, 6:206, https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4340140/pdf/fpsyg-06-00206.pdf

R. Imhoff and P. Lamberty, How Paranoid Are Conspiracy Believers? Toward a More Fine-Grained Understanding of the Connect and Disconnect Between Paranoia and Belief in Conspiracy Theories, European Journal of Social Psychology 48, 909 (2018), https://onlinelibrary.wiley.com/doi/abs/10.1002/ejsp.2494

https://en.wikipedia.org/wiki/Schizotypy

J. Pierre, Understanding the Psychology of Conspiracy Theories, https://www.psychologytoday.com/us/blog/psych-unseen/202001/understanding-the-psychology-conspiracy-theories-part-1

E.W. Dolan, Study: Conspiracy Theorists Are Not Necessarily Paranoid, https://www.psypost.org/2018/05/study-conspiracy-theorists-not-necessarily-paranoid-51216

J. Hart and M. Graether, Something’s Going On Here: Psychological Predictors of Belief in Conspiracy Theories, Journal of Individual Differences 39, 229 (2018), https://econtent.hogrefe.com/doi/10.1027/1614-0001/a000268

Diagnostic and Statistical Manual of Mental Disorders, 5th Edition, https://dsm.psychiatryonline.org/

https://www.psychologytoday.com/us/conditions/schizotypal-personality-disorder

https://en.wikipedia.org/wiki/Dopamine

https://srconstantin.wordpress.com/2014/07/02/dopamine-perception-and-values/

W. Schultz, Dopamine Reward Prediction Error Coding, Dialogues in Clinical Neuroscience 18, 23 (2016), https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4826767/

C.T. Dawes and J.H. Fowler, Partisanship, Voting and the Dopamine D2 Receptor Gene, The Journal of Politics 71, 1157 (2009), http://jhfowler.ucsd.edu/partisanship_voting_and_drd2.pdf

http://sitn.hms.harvard.edu/flash/2018/dopamine-smartphones-battle-time/

M.M. Nour, et al., Dopaminergic Basis for Signaling Belief Updates, But Not Surprise, and the Link to Paranoia, Proceedings of the National Academy of Sciences 115, E10167 (2018), https://www.pnas.org/content/pnas/115/43/E10167.full.pdf

https://mentalhealthdaily.com/2015/04/01/high-dopamine-levels-symptoms-adverse-reactions/

https://en.wikipedia.org/wiki/Josh_Billings

D. Jolley and K.M. Douglas, The Effects of Anti-Vaccine Conspiracy Theories on Vaccination Intentions, PLoS ONE 9, e89177 (2014), https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0089177

D. Jolley and K.M. Douglas, Prevention is Better Than Cure: Addressing Anti-Vaccine Conspiracy Theories, Journal of Applied Social Psychology 47, 459 (2017), https://kar.kent.ac.uk/60913/

https://metro.co.uk/2020/05/17/man-who-claimed-coronavirus-hoax-changes-mind-hospital-bed-12715471/

K.S. Deeg, et al., Who Is Changing Their Mind About Global Warming and Why, https://climatecommunication.yale.edu/publications/who-is-changing-their-mind-about-global-warming-and-why/

C.T. Bergstrom and J. West, Calling Bullshit: Data Reasoning in a Digital World, https://callingbullshit.org/index.html

https://debunkingdenial.com/science-denial-and-the-coronavirus/

https://debunkingdenial.com/wellness-fads-part-i-dr-mehmet-oz/

https://debunkingdenial.com/ten-false-narratives-of-climate-change-deniers-part-ii/

https://debunkingdenial.com/wellness-fads-part-iii-deepak-chopra/