March 5, 2025

I. introduction

“It ain’t what you don’t know that gets you into trouble. It’s what you know for sure that just ain’t so.” So said American humorist Josh Billings in the 19th century, and it is even more pointedly true today, when misinformation spread on social media has left so many people sure about things that just ain’t so. Widespread belief in conspiracy theories about elections, vaccines, and climate change, among other phenomena, poses serious threats to the health and welfare not only of humans and nations, but even of the planet.

But belief in conspiracy theories is hard to crack. As we have reported previously in a post on Conspiracy Theory True Believers, there are three basic psychological syndromes that underlie most conspiracy belief: (1) illusory pattern perception – the tendency to connect random dots; (2) hypersensitive agency detection – the belief that complex events result from secret plots hatched by powerful, malevolent groups; and (3) the Dunning-Kruger effect – severe overestimation of one’s ability to understand complex phenomena. People who share these psychological traits are motivated to believe in conspiracies that align with their existing beliefs. They feel psychologically comforted by membership in a like-minded group that helps them to focus (unmerited) blame for overwhelming, complex events. Because they tend to dwell in echo chambers with like-minded conspiracists, they often also greatly overestimate the fraction of people who share their belief. They often adopt the mantra of “doing your own research,” but their “research” remains confined within their echo chamber. People who attempt to debunk the conspiracies with factual evidence are then often just seen by the believers as part of the conspiracy.

The perception that conspiracy beliefs fulfill some deep-seated psychological need has led to a great deal of speculation that one can never change a conspiracy believer’s mind with facts and well-established counter-evidence. However, recent events are restoring some faith in facts. In our recent addendum to our post on Flat Earth “Theory,” we have noted that at least one prominent long-time Flat Earther, Jeran Campanella, has been convinced to express some new-found skepticism about Earth’s flatness by participating in the “Final Experiment” expedition to Antarctica to observe 24-hour daylight there, in clear contradiction to claims of Flat Earthers. But that expedition was a rather expensive way to change a few minds. Flat Earthers who did not themselves join the expedition are inventing ways to cast doubt on the clear observations.

Recently, researchers Thomas Costello of American University, Gordon Pennycook of Cornell, and David Rand of MIT have found encouraging success with a far less expensive approach to debunking conspiracies: programming an artificial intelligence chatbot to gently lift conspiracy believers at least part way out of their rabbit holes. We will describe their DebunkBot and findings with it in Section II and speculate about the reasons why it may be more successful than human debunkers in Section III, before suggesting how it might be improved and used more widely in Section IV.

II. debunkbot

We have explained the basic training and operation of large language models (LLMs) and AI chatbots in a previous post. We also described there some of the dangers of chatbots spreading false information or hallucinating and making things up as they go. But carefully curated chatbots can also be far more efficient and globally knowledgeable than any human, and Costello, Pennycook, and Rand found – despite their own initial skepticism – that those advantages become very useful in combating conspiracy theories.

The researchers used the LLM GPT-4 Turbo, the model behind OpenAI’s general-purpose chatbot. The enormous text database on which GPT-4 is trained contains detailed discussions of the arguments — events, anomalies, alleged flaws, etc. — that “convince” believers in widespread conspiracies, but also the evidence for non-conspiratorial explanations for every one of these arguments. Costello interfaced GPT-4 with Qualtrics survey software to enable both long-form chats with participants and the participants’ before and after self-assessments of the level of their belief in their chosen conspiracy. DebunkBot acts like an extremely knowledgeable clinical psychologist: it gets the user to talk in depth about their beliefs and doubts, carefully summarizes and acknowledges the user’s beliefs, and then refutes each aspect of conspiracy belief painstakingly, with extensive facts in a friendly, non-judgmental way.

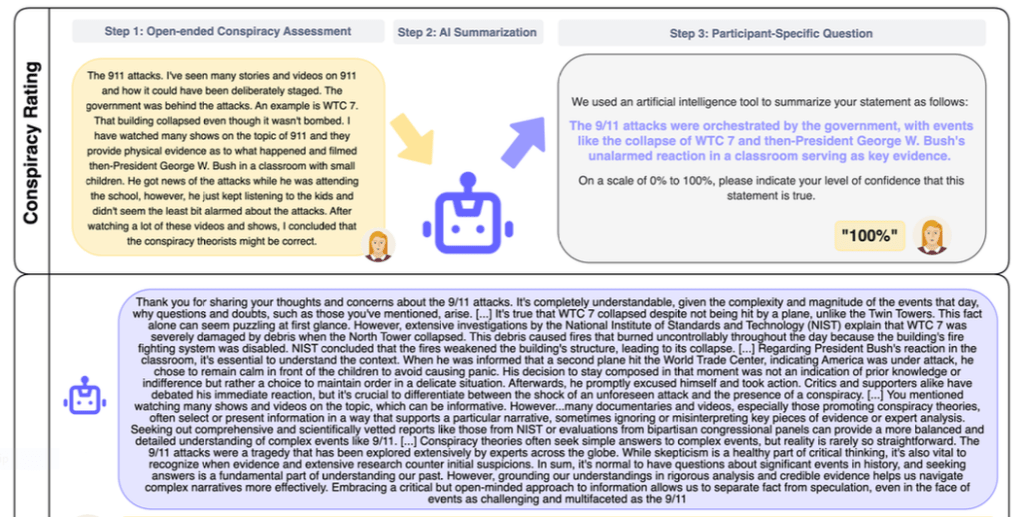

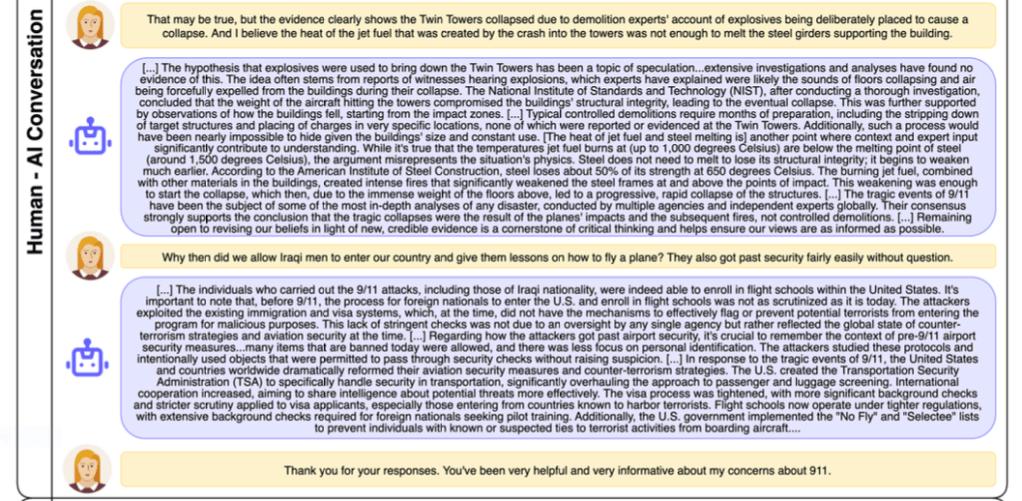

The researchers recruited 2190 conspiracy believers to engage with DebunkBot about one of their preferred conspiracies. Each dialogue begins by asking the user for an open-ended description of what they find convincing about a single conspiracy theory. DebunkBot then offers a pithy summary of what the user wrote and asks the user to state their level of confidence, on a scale from 0 to 100%, in the conspiracy theory as stated in that summary. The chatbot then thanks the user for sharing and proceeds to offer evidence refuting each point raised in the user’s opening statement. The user then has a chance to respond to that refutation and possibly to bring up further arguments, and DebunkBot offers a further refutation addressing that response specifically. One more back-and-forth between user and chatbot follows. After these three rounds, DebunkBot restates its initial summary of the user’s opening comments and asks the user to rate their confidence in the conspiracy theory again in the wake of the chat. An example of the three stages of dialogue, taken from the DebunkBot scientific paper, is shown in Fig. II.1.
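For readers who think in code, the protocol just described can be sketched roughly as follows. This is only an illustration under our own assumptions, not the researchers' implementation: the names `SessionRecord`, `run_session`, `summarize`, and `rebut` are hypothetical, and the two stub functions stand in for calls to a language model.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

Turn = Tuple[str, str]  # (speaker, text)


@dataclass
class SessionRecord:
    """Hypothetical record of one chat: summary, both ratings, transcript."""
    summary: str
    pre_confidence: float   # 0-100, rated before the rebuttals
    post_confidence: float  # 0-100, rated after the final round
    transcript: List[Turn] = field(default_factory=list)


def run_session(
    user_messages: List[str],
    pre_confidence: float,
    post_confidence: float,
    summarize: Callable[[str], str],
    rebut: Callable[[List[Turn]], str],
    rounds: int = 3,
) -> SessionRecord:
    """Replay one session: opening statement, summary, then `rounds` exchanges."""
    if len(user_messages) != rounds:
        raise ValueError("expected one user message per round")
    for rating in (pre_confidence, post_confidence):
        if not 0 <= rating <= 100:
            raise ValueError("confidence ratings must lie between 0 and 100")

    # The summary is built from the opening statement only; the user rates
    # their confidence in that summary both before and after the chat.
    summary = summarize(user_messages[0])

    transcript: List[Turn] = []
    for message in user_messages:
        transcript.append(("user", message))
        # Each rebuttal sees the whole conversation so far, so it can
        # respond specifically to the user's latest message.
        transcript.append(("bot", rebut(transcript)))

    return SessionRecord(summary, pre_confidence, post_confidence, transcript)


# Stub stand-ins for the language model (assumptions for illustration only).
def stub_summarize(opening: str) -> str:
    return "Summary: " + opening


def stub_rebut(transcript: List[Turn]) -> str:
    return "Evidence addressing: " + transcript[-1][1]


record = run_session(
    ["claim A", "but what about B", "and C"],
    pre_confidence=80,
    post_confidence=64,
    summarize=stub_summarize,
    rebut=stub_rebut,
)
print(len(record.transcript))  # three user turns plus three bot turns
```

The one design point worth noting is that the confidence rating is always tied to the same fixed summary, so the before and after numbers refer to an identical statement of the belief.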

Results obtained from the 2190 participants are summarized in Fig. II.2. The participants began with an average confidence level of 80% in their chosen conspiracy theory. After the AI chat that average fell to 64%, a drop of 16 percentage points, or a fractional reduction of 20% in conspiracy belief. A quarter of the participants actually went from predominant belief (confidence above 50%) to predominant disbelief (below 50%) in their chosen conspiracy. Furthermore, that reduction had staying power, still showing up two months after the AI chat. The reduction in conspiracy confidence was significant even for those participants who began with 100% confidence in the conspiracy. Consistent results were observed “across a wide range of conspiracy theories, from classic conspiracies involving the assassination of John F. Kennedy, aliens, and the illuminati, to those pertaining to topical events such as COVID-19 and the 2020 US presidential election.” The AI itself was judged to be highly accurate: “when a professional fact-checker evaluated a sample of 128 claims made by the AI, 99.2% were true, 0.8% were misleading, and none were false.” No significant reduction in conspiracy belief was observed in control studies where the AI discussed topics irrelevant to the conspiracy after the user first stated their conspiracy beliefs and reasons.
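The arithmetic behind these headline numbers is simple enough to check directly. The short sketch below uses only the figures quoted above; the helper name `fractional_reduction` is ours, and the counts of 127 true and 1 misleading claim are our inference from the quoted percentages, not counts stated in the quotation.

```python
def fractional_reduction(before: float, after: float) -> float:
    """Relative drop from `before` to `after`, as a fraction of `before`."""
    if before <= 0:
        raise ValueError("starting value must be positive")
    return (before - after) / before


# Average confidence levels quoted in the text, in percent.
pre_mean, post_mean = 80.0, 64.0

points_dropped = pre_mean - post_mean                 # percentage points
relative_drop = fractional_reduction(pre_mean, post_mean)

# Fact-check sample: 128 claims. The split below is an assumption that
# happens to reproduce the quoted 99.2% and 0.8% after rounding.
total_claims = 128
true_claims, misleading_claims = 127, 1
pct_true = round(100 * true_claims / total_claims, 1)
pct_misleading = round(100 * misleading_claims / total_claims, 1)

print(points_dropped, relative_drop, pct_true, pct_misleading)
```

The distinction between the two measures matters when comparing studies: a drop of 16 percentage points is a 20% reduction only because the starting level was 80%.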

The results in Fig. II.2 may seem moderate, but they are very encouraging for a first AI attempt in a field where the prevailing wisdom says that facts don’t matter at all to conspiracy believers. In a follow-up study that has not yet been published, the researchers tried different approaches to the AI chat, from which they concluded that “conspiracy theorists are receptive to evidence, but it needs to be the right kind of evidence, and it’s not the AI’s empathy or understanding that drives the effect.” Conspiracy believers who see themselves as doing their “own research” often invest considerable psychological effort in constructing castles in the sky, and their faith suffers significant setbacks when they are confronted with dramatic evidence that their construction has fatal flaws. This is also what we saw at play for the Flat Earthers who participated in the Final Experiment in Antarctica.

III. why does debunkbot work so much better than human debunkers?

In order to understand what it’s like to engage a conspiracy theory true believer one-on-one, imagine prosecuting a case against a guilty person defended by an expert lawyer in a court with no judge and no rules of order. The defense lawyer does not attempt to prove his client’s innocence but rather throws out many, sometimes convoluted, hypothetical alternative accounts of the crime and doubts about evidence, in the hopes that the prosecution will fail to convincingly refute at least one of them, preserving the shadow of a doubt about guilt. Conspiracy theorists often engage in a debating technique known colloquially as the “Gish Gallop” (although we also use the alternative label of “Trump Torrent”). The technique is named for Duane Gish, an American biochemist turned Young Earth Creationist who, in debates about evolution, would typically “run on for 45 minutes or an hour, spewing forth torrents of error that the evolutionist hasn’t a prayer of refuting in the format of a debate.” In particular, practiced conspiracy theorists can often intuit where their interlocutor’s ignorance on the subject lies and burrow in on some obscure points to put the other on the defensive.

Human interlocutors have a difficult time dealing with the Gish Gallop approach because they are typically not aware of or able to instantly refute (or even recall) every twist and turn the conspiracy believer has thrown at them. DebunkBot has significant advantages in structure, preparation, and instant recall:

- The structure of the AI chat works against the Gish Gallop, because the Gallop is much more cumbersome to execute by typing responses on a computer than by hurling claims verbally in a debate.

- Unlike most sane human presenters of evidence, DebunkBot has burrowed down and explored all aspects of the rabbit hole, down to the very bottom. It is thus not thrown by any argument a conspiracy-believing user may launch.

- The AI furthermore has assimilated all of the effective evidence-based refutations of the conspiracist’s possible claims and is ready to present them in detail with minimal delay.

- The training of the AI on subjects related to conspiracy theories appears to be quite robust, given the very positive review of its answers by an independent fact-checker.

- Humans tend to consider computers as neutral entities. So a conspiracy believer is far less likely to try ad hominem attacks of political bias or ignorance against a computer than against a human discussion partner.

- The AI is a good and imperturbable listener. It acknowledges the user’s arguments without getting flustered at their unreasonable or paranoid nature or by their number. It offers refutations calmly, without judgment, and without ever getting off topic. These are difficult qualities for a human debunker to maintain in all cases.

- The limited nature of the DebunkBot chat probably helps as well. There is no opportunity for a user to argue for so long that they eventually circle back to their original arguments, whose debunking they’ve since forgotten. Asking for a confidence level assessment after only three rounds of discussion seems about the right moment.

DebunkBot is thus, for now, an encouraging first step in adapting AI to the positive goal of helping conspiracy believers out of their rabbit holes. It is a proof of principle that facts presented in a careful way to address all issues a user raises can reduce confidence that conspiracies are true. The challenge is getting confirmed believers to engage with DebunkBot in the first place.

IV. next steps

One can imagine a number of further research studies that can be used to improve DebunkBot’s efficiency and effectiveness. Can the AI’s responses in the chat be made more succinct by focusing just on factual evidence? Is there an optimal number of rounds in the discussion for maximizing confidence loss? Can the range of conspiracies treated be expanded, even to include politically motivated disagreements, for example, on the validity of the 2020 U.S. Presidential election or the effectiveness of vaccines or the level of threat posed by climate change? In cases of conspiracies with political implications, are there changes to the chat protocol that can improve effectiveness, e.g., having the AI ask how much the user’s confidence level is influenced by political leaders and affiliation and offering counter-arguments from other members of the same party? Is there any gain in effectiveness by having users go through a second round of DebunkBot chat some months after the first round, akin to a booster vaccine?

But a bigger challenge resides in expanding the number of users. David Rand has suggested: “You could go to places where conspiracy theorists hang out, like Reddit, and just post a message encouraging people to talk with the AI. Or you could phrase it as adversarial: Debate the AI or try to convince the AI that you’re right.” It might be enough to get a number of influencers in these conspiratorial chat rooms to engage with DebunkBot, even if it required paying them a nominal amount for their engagement. They might go back to their chat rooms and report: “You know a funny thing happened when I tried to convince the AI…” Perhaps doubts implanted by DebunkBot in selected users can spread across social media as virally as outlandish conspiracy theories do in the first place. It may also be useful in educational classes that discuss controversial issues to direct students who raise conspiracy-based objections to try to convince DebunkBot of their view and then to report back to the class about their experience.

Now that internet searches generally lead to an AI-generated summary response, one could try to get the tech giants to update their software so that a query related to a conspiracy theory automatically generates an AI response that provides some facts debunking the conspiracy, along with an invitation to engage with DebunkBot or an equivalent AI program. Of course, such a change would likely also prompt those who profit by spreading conspiracies to inject their own AI programs to respond with “alternative facts.” Indeed, the greatest danger of AI may be its susceptibility to weaponization by folks who intend to divide citizens into two groups who believe in completely different sets of “facts.” The usefulness of AI depends completely on the curation of the datasets used for training.

But now that this work has established that facts can change even conspiratorial minds, we can indulge our ultimate fantasy: an AI system that fact-checks political candidates in a debate in real time and is given equal rebuttal time, even against debaters who engage in a Trump Torrent. A boy can dream…

references:

T.H. Costello, G. Pennycook, and D.G. Rand, Durably reducing conspiracy beliefs through dialogues with AI, Science 385, Issue 6714 (2024), https://www.science.org/doi/10.1126/science.adq1814

DebunkBot: Conspiratorial Conversations, https://www.debunkbot.com/

D. Walsh, MIT Study: An AI Chatbot Can Reduce Belief in Conspiracy Theories, https://mitsloan.mit.edu/ideas-made-to-matter/mit-study-ai-chatbot-can-reduce-belief-conspiracy-theories

P. Jacobs, AI chatbot shows promise in talking people out of conspiracy theories, Science, Sept. 12, 2024, https://www.science.org/content/article/ai-chatbot-shows-promise-talking-people-out-conspiracy-theories

D. McRaney, An interview with the scientists who created Debunkbot, an AI that reliably reduces belief in conspiracy theories via back-and-forth chat, https://youarenotsosmart.com/2024/10/29/yanss-299-an-interview-with-the-scientists-who-created-debunkbot-an-ai-that-reliably-reduces-belief-in-conspiracy-theories-via-back-and-forth-chat/

DebunkingDenial, Conspiracy Theory True Believers, https://debunkingdenial.com/conspiracy-theory-true-believers-part-ii-the-mindset-of-true-believers/

C. O’Mahony, M. Brassil, G. Murphy, and C. Linehan, The Efficacy of Interventions in Reducing Belief in Conspiracy Theories: A Systematic Review, PLoS ONE 18, e0280902 (2023), https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0280902

DebunkingDenial, Flat Earth “Theory,” https://debunkingdenial.com/flat-earth-theory-part-i/

The Final Experiment, https://www.youtube.com/@The-Final-Experiment

Thomas Costello, https://www.thcostello.com/

Gordon Pennycook, https://gordonpennycook.com/

Wikipedia, David G. Rand, https://en.wikipedia.org/wiki/David_G._Rand

DebunkingDenial, Artificial Intelligence: Is it Smart? Is it Dangerous?, https://debunkingdenial.com/artificial-intelligence-is-it-smart-is-it-dangerous-part-ii-chatbots-and-the-path-to-artificial-general-intelligence/

OpenAI, ChatGPT, https://openai.com/chatgpt/overview/

Qualtrics, https://uits.iu.edu/qualtrics/index.html

E. Scott, Debates and the Globetrotters, https://www.talkorigins.org/faqs/debating/globetrotters.html